This post continues our discussion on Future Drones.

Mission autonomy has evolved in parallel with the automation of piloting functions. It derives from mission payloads that have become smarter, able to collect and process data, fuse it with other sensor streams, and store it on the platform. Persistent Wide Area Surveillance (PWAS) is one example of such capabilities, helping military and security forces watch and control wide areas over long periods with minimal intervention of forces.

Deployed on surveillance missions, drones ‘carpet’ wide areas to provide mission analysts with streams of data from electronic surveillance, radar, and multi- and hyperspectral imaging sensors. Analysts use this data to detect ‘signatures’ and ‘anomalies’ that indicate the presence of potential targets. To support such missions, drones are designed to carry multiple payloads, each gathering data in a specific discipline and spectral range. Often, the volume of data collected is too large to transfer to the ground station in real time over standard datalinks, so solutions are required that give analysts access to the information on board the drone itself. Such capabilities enable analysts to tap the most important and relevant events, investigate the signatures of objects and tracks in different spectral bands, and map the results geographically to explore relevant events in the present as well as the past, providing actionable information for intelligence gathering and time-sensitive targeting processes.

RAFAEL’s Reccelite aerial reconnaissance pod was developed for such missions. While the drone and pod sensors follow a pre-planned mission, the plan can also be updated in flight, changing the payload steering sequence and camera direction to deliver the most effective coverage of specific locations and targets. The pod uses gimballed, high-resolution aerial reconnaissance cameras and an integral inertial navigation system (INS) to automatically cover a wide area with high-resolution images, maintaining a revisit rate that provides persistent surveillance of specific areas of interest. The pod has integral mass storage and a wideband data link designed to transfer high-resolution images to the ground control center, where they are processed and displayed to analysts.

For many years radar was the primary sensor for wide area surveillance. Among radars, Synthetic Aperture Radar (SAR) is particularly useful, functioning well by day and night as well as in adverse weather and limited visibility. SAR also offers effective detection of objects hidden under camouflage or buried underground. In the past, SAR systems were carried by large aircraft, while smaller systems housed in pods required dedicated fighter aircraft to carry them into battle.

Today, smaller systems are deployed on large Medium Altitude, Long Endurance (MALE) UAS, and even smaller ones, fitted into compact underwing pods, are used with tactical UAS, enabling such small platforms to carry multiple sensors on a mission. SAR relies on a synthetic aperture, synthesized over distance and time, as opposed to EO sensors that use a real, physical aperture. This means that, although the physical footprint of the radar is small, it collects imagery at a high resolution, as though its antenna were much larger. As an active microwave sensor, SAR ‘illuminates’ the area under surveillance and can therefore operate by day and night. The wavelength selected for operation penetrates atmospheric obstructions that scatter and diffuse visible light, such as fog, rain, haze or dust, to gain effective all-weather visibility. Since each pixel in the SAR image carries data about that particular point of the target location, different processing can be applied to exploit this information, automatically generating complex products such as coherent change detection (CCD), foliage penetration (FOPEN) and moving target indication (MTI).
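The advantage of a synthesized aperture can be illustrated with the standard textbook approximations: a real aperture of length D resolves roughly λR/D in cross-range at range R, while a focused stripmap SAR resolves roughly D/2 regardless of range. The sketch below is a minimal illustration. The wavelength, range and antenna length are assumed values chosen for the example, not figures for any system named in this post.

```python
def real_aperture_resolution(wavelength_m: float, range_m: float, antenna_m: float) -> float:
    """Cross-range resolution of a real aperture: beamwidth (lambda / D) times range."""
    return wavelength_m * range_m / antenna_m


def synthetic_aperture_length(wavelength_m: float, range_m: float, antenna_m: float) -> float:
    """Along-track distance over which a ground point stays inside the real beam."""
    return wavelength_m * range_m / antenna_m


def stripmap_sar_resolution(antenna_m: float) -> float:
    """Best-case azimuth resolution of a focused stripmap SAR: half the antenna length."""
    return antenna_m / 2.0


if __name__ == "__main__":
    # Assumed, illustrative values: X-band (3 cm), 20 km slant range, 0.5 m antenna.
    wavelength, slant_range, antenna = 0.03, 20_000.0, 0.5
    print("real aperture resolution [m]:", real_aperture_resolution(wavelength, slant_range, antenna))
    print("synthetic aperture length [m]:", synthetic_aperture_length(wavelength, slant_range, antenna))
    print("stripmap SAR resolution [m]:", stripmap_sar_resolution(antenna))
```

With these assumed numbers the real aperture resolves about 1,200 m in cross-range, while the synthesized aperture brings that down to about 0.25 m, which is why a pod-sized antenna can still produce high-resolution imagery.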

MTI is an advanced SAR capability that processes the same microwave signals with different algorithms to detect and track moving targets. By analyzing and comparing the Doppler shift of those signals, the radar can clearly display multiple moving targets over a stationary background. This function enables operators to monitor large areas and instantly detect activities associated with such movement.
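The Doppler relationship behind MTI can be sketched in a few lines. For a monostatic radar the two-way Doppler shift is f_d = 2·v_r/λ, so the radial velocity follows directly from the measured shift. The function names, the input format and the speed threshold below are hypothetical, and the sketch assumes the Doppler values have already been compensated for the platform's own motion, which a real MTI processor must do first.

```python
def radial_velocity(doppler_hz: float, wavelength_m: float) -> float:
    """Two-way Doppler: f_d = 2 * v_r / lambda, hence v_r = f_d * lambda / 2."""
    return doppler_hz * wavelength_m / 2.0


def moving_targets(returns, wavelength_m: float, min_speed_mps: float = 1.0):
    """Keep returns whose radial speed is at least min_speed_mps.

    `returns` is an iterable of (cell_id, doppler_hz) pairs, with Doppler
    already corrected for platform motion (an assumption of this sketch).
    """
    movers = []
    for cell_id, doppler_hz in returns:
        v = radial_velocity(doppler_hz, wavelength_m)
        if abs(v) >= min_speed_mps:
            movers.append((cell_id, v))
    return movers


if __name__ == "__main__":
    # Assumed X-band wavelength and made-up Doppler measurements.
    sample = [("A", 0.0), ("B", 400.0), ("C", -20.0)]
    print(moving_targets(sample, wavelength_m=0.03))
```

Only cell "B" survives the threshold here, since a 400 Hz shift at a 3 cm wavelength corresponds to a radial speed of about 6 m/s, while the other two cells are effectively stationary.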

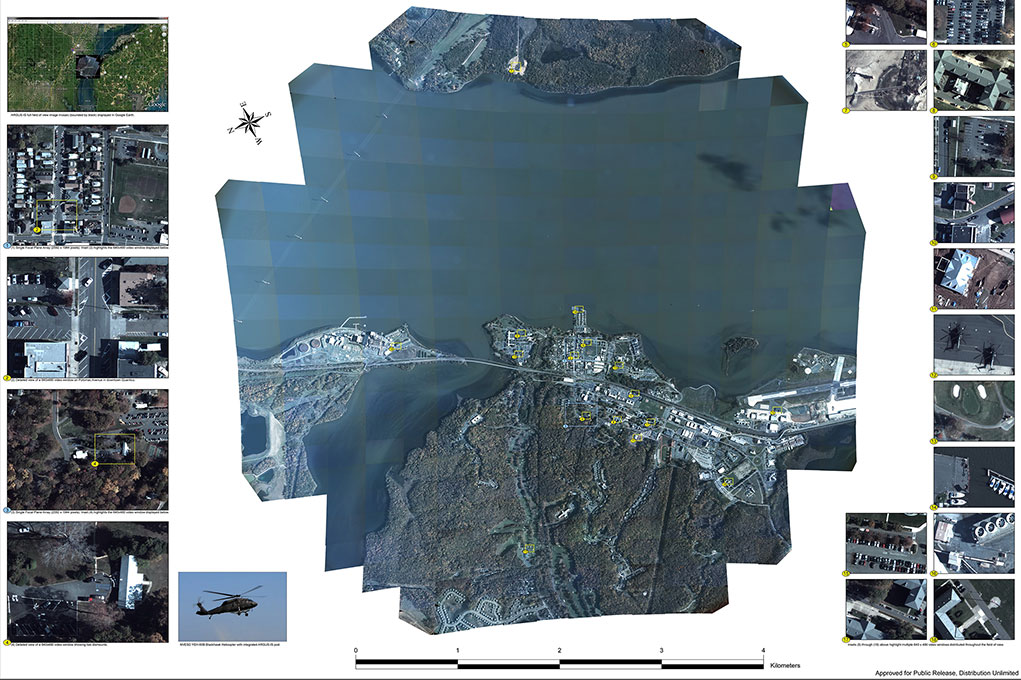

Other mission payloads, like Gorgon Stare, developed by Sierra Nevada Corp. (SNC), were designed specifically for persistent wide area surveillance (PWAS). The system became operational with the US Air Force in 2011 on the MQ-9 Reaper drone, each aircraft carrying a twin-pod PWAS system. In its first iteration, the system covered an area of about 16 square kilometers. By 2014 the second generation was fielded with an improved sensor developed by BAE Systems under the DARPA ARGUS-IR program. This sensor array consists of 368 cameras (daylight and infrared) that together create an image of 1.8 billion pixels. In addition, four telescopic cameras enable users to zoom in on objects of interest. The new version covers an area of 64 square kilometers with twice the resolution of the original system, both by day and by night.
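As a back-of-the-envelope check on these figures, the ground patch seen by one pixel can be estimated from the coverage area and the pixel count. The sketch below assumes, optimistically, that all 1.8 billion pixels form a single non-overlapping mosaic spread evenly over the 64 square kilometers. Since the array mixes daylight and infrared cameras, the real figure is likely coarser. The function name is illustrative.

```python
import math


def mean_ground_sample_distance(area_km2: float, pixels: float) -> float:
    """Side length in meters of the ground patch per pixel.

    Assumes uniform, non-overlapping coverage (an idealization of this sketch).
    """
    area_m2 = area_km2 * 1_000_000.0
    return math.sqrt(area_m2 / pixels)


if __name__ == "__main__":
    gsd = mean_ground_sample_distance(area_km2=64.0, pixels=1.8e9)
    print(f"idealized ground sample distance: {gsd:.2f} m")
```

Under that assumption each pixel covers a patch roughly 0.19 m on a side, which gives a sense of why the onboard processing and storage described next had to be purpose-built.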

At the time, no commercial systems could meet the data processing and storage requirements at the small size and low power consumption suitable for unmanned platforms. To handle the greater processing tasks involved with the new sensor, BAE Systems developed an advanced processor capable of processing the output of hundreds of individual cameras in parallel. To store this data the company built an ‘airborne big-data storage system’. These are the TeraStar systems, which have since evolved into commercial products offering scalable, ultra-dense data storage that reduces the overall cost per terabyte of storage capacity.

Unlike the podded Gorgon Stare, Elbit Systems’ SkEye is configured as a standard UAV payload that can be integrated with UAVs such as the company’s Hermes 450 or 900 drones, as well as with various other manned or unmanned aircraft. Cruising at the drone’s operational altitude, SkEye’s gigapixel payload integrates multiple staring cameras to cover an area of 80 square kilometers. As each sensor uses a high-megapixel camera, users can obtain close-up images of parts of the area, in real time or from historic SkEye records. In addition, the drone can carry standard UAV payloads to obtain higher magnification views of specific areas.

In addition to EO systems, wide area surveillance capabilities include Synthetic Aperture Radar (SAR) and communications intelligence (COMINT) sensors. Users can access their data in real time or ‘backtrack’ to view the same point of interest over time, gaining a better understanding of patterns of life or target behavior.

An evolving capability added to persistent surveillance is the Dismount Detection Radar (DDR), a pod-mounted sensor that functions much like a ground surveillance radar but is carried by a UAV and covers a large area. Such sensors give a UAV equipped with gimballed EO sensors the situational awareness needed to draw the payload operator’s attention to suspicious movements in the area of responsibility. They can also help track routine movements and assess patterns of life, enabling surveillance systems to learn the mission area and avoid false alarms. Such capabilities could also have important uses in search and rescue and homeland security applications.

Drones can be equipped with wideband data links to send high-definition sensor data, but most are not designed to support multiple simultaneous streams, nor can they deliver the processing power, energy and cooling needed to facilitate such ‘flying data warehouse’ services. Some solutions already integrate the necessary hardware. For example, the SkEye architecture maintains all sensor data on the aircraft, giving mission analysts access to real-time and stored image data from its database. After the mission, the data is downloaded and stored at mission control, enabling users to access historical mission records on demand.
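The ‘flying data warehouse’ idea, where imagery stays on the aircraft and analysts pull only what they ask for, can be sketched as a small time- and location-indexed archive. This is a minimal illustration and not a description of SkEye’s actual design. The class, its methods and the simple latitude/longitude box test are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    t: float    # capture time, seconds since mission start
    lat: float  # frame center latitude, degrees
    lon: float  # frame center longitude, degrees
    ref: str    # handle to the image tile held in onboard storage


class OnboardArchive:
    """Keeps every frame on the platform and serves small, targeted queries."""

    def __init__(self):
        self._frames = []

    def record(self, frame: Frame) -> None:
        self._frames.append(frame)

    def backtrack(self, lat, lon, half_width_deg, t_start, t_end):
        """Return frames inside a lat/lon box around the point, oldest first."""
        hits = [
            f for f in self._frames
            if t_start <= f.t <= t_end
            and abs(f.lat - lat) <= half_width_deg
            and abs(f.lon - lon) <= half_width_deg
        ]
        return sorted(hits, key=lambda f: f.t)


if __name__ == "__main__":
    archive = OnboardArchive()
    archive.record(Frame(120.0, 31.50, 34.50, "tile-0002"))
    archive.record(Frame(60.0, 31.50, 34.50, "tile-0001"))
    archive.record(Frame(90.0, 32.40, 35.10, "tile-0003"))
    for frame in archive.backtrack(31.50, 34.50, 0.01, 0.0, 200.0):
        print(frame.t, frame.ref)
```

Only the frames matching the query would need to cross the data link, which is the point of keeping the full record on board.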

To support similar capabilities, the US Air Force contracted SRC to deliver ‘Agile Condor’, an architecture that enables high-performance embedded computing (HPEC) on board remotely piloted aircraft (RPA). Each pod has an internal chassis carrying a supercomputer built of standard commercial off-the-shelf (COTS) units, including single-board computers (SBCs), graphics processing units (GPUs), Field-Programmable Gate Arrays (FPGAs), and solid-state storage devices (SSDs). Currently, the chassis utilizes a modular, distributed network of processors and co-processors based on open industry standards to deliver more than 7.5 teraflops of computational power at better than 15 gigaflops per watt. The pod enclosure is based on an existing, flight-certified design, specifically modified to use ambient air cooling for thermal management of the embedded electronics. This upgradeable architecture supports fast technology refresh, which decreases life-cycle costs, reduces system downtime, and assures continued usefulness of the system.
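The two performance figures quoted above imply a power budget, which matters for a pod that relies on ambient air cooling. The sketch below simply divides throughput by efficiency. Because both published numbers are stated as bounds ("more than", "better than"), the result should be read as a nominal order of magnitude rather than a specification. The function name is illustrative.

```python
def implied_power_watts(teraflops: float, gigaflops_per_watt: float) -> float:
    """Power draw implied by a throughput figure and an efficiency figure."""
    gigaflops = teraflops * 1000.0
    return gigaflops / gigaflops_per_watt


if __name__ == "__main__":
    watts = implied_power_watts(teraflops=7.5, gigaflops_per_watt=15.0)
    print(f"nominal compute power budget: {watts:.0f} W")
```

At the quoted figures this works out to about 500 W for the compute payload.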

Agile Condor can also enhance the drone’s situational awareness using ‘neuromorphic computing’, a bio-inspired processing scheme that analyzes information in a manner similar to the human brain.

According to SRC, the Agile Condor developer, such systems will become mission systems managers in the future, processing data from multiple sensors on board and using machine learning to selectively cue sensors for particular scenarios. Instead of surveying large areas with all sensors, the drone would conserve power by using only the sensors best equipped to detect specific anomalies over large areas. When a point of interest is spotted, the system will cue other sensors, like a camera, to collect more information and notify the human mission analyst for further review and inspection. This selective “detect and notify” process frees up bandwidth and increases transfer speeds while reducing latency between data collection and analysis. SRC is scheduled to deliver the first Agile Condor pod to the Air Force by the end of 2017.
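The “detect and notify” flow described above can be sketched as a simple filter-and-cue loop. This is a hypothetical illustration, not SRC’s implementation: the `Detection` type, the anomaly score, the threshold and the two callbacks are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    lat: float
    lon: float
    score: float  # anomaly score in [0, 1] from the wide-area sensor (hypothetical)


def detect_and_notify(detections, cue_sensor, notify_analyst, threshold=0.8):
    """Cue a narrow-field sensor and alert the analyst only for high-scoring detections.

    cue_sensor(lat, lon) returns whatever the cued sensor collected;
    notify_analyst(detection, collected) forwards it for human review.
    """
    cued = []
    for det in detections:
        if det.score < threshold:
            continue  # low-scoring detections stay on board; nothing is transmitted
        collected = cue_sensor(det.lat, det.lon)
        notify_analyst(det, collected)
        cued.append(det)
    return cued


if __name__ == "__main__":
    inbox = []
    wide_area = [Detection(31.0, 34.0, 0.95), Detection(31.1, 34.1, 0.20)]
    detect_and_notify(
        wide_area,
        cue_sensor=lambda lat, lon: f"img@{lat},{lon}",
        notify_analyst=lambda det, img: inbox.append((det, img)),
    )
    print(len(inbox), "notification(s) sent")
```

Because only detections above the threshold trigger a collection and a message, the data link carries a handful of cued snapshots rather than every sensor stream, which is the bandwidth saving the paragraph describes.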

More in the ‘Future Autonomous Drones’ review:

- Step I: Minimizing Dependence on Human Skills

- Step II: Mission Systems’ Automation

- Step III: Higher Autonomy Becoming Affordable

- Step IV: Teams, Squadrons, and Swarms of Bots

More in the ‘Future Drones’ series: